Artificial intelligence models are rapidly evolving, and DeepSeek v3.2 stands out as one of the most efficient and performance-optimized releases of 2025. With its Sparse Attention mechanism and enhanced context understanding, DeepSeek v3.2 is redefining how developers and data scientists build, train, and deploy large language models (LLMs).

In this guide, we’ll break down DeepSeek v3.2’s new features, benefits, and use cases, showing how it compares to previous versions, where it performs best, and why it’s becoming a favorite among AI professionals.

What Is DeepSeek v3.2?

DeepSeek v3.2 is the latest version of the DeepSeek model family, built for long-context reasoning with lower compute requirements. Unlike earlier versions, it uses DeepSeek Sparse Attention (DSA): rather than comparing every token with every earlier token, each token attends only to a selected subset of earlier tokens. This lets the model process long inputs efficiently without consuming excessive GPU resources.
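To make the idea concrete, here is a minimal Python sketch of top-k sparse attention for a single query token. It assumes plain lists of numbers for the query, keys, and values; the function name `topk_sparse_attention` and the parameter `k` are illustrative, not DeepSeek’s code. For simplicity it scores every key with the same dot product, so it shows the selection logic rather than the speedup. DeepSeek describes using a separate lightweight indexer to pick tokens cheaply before running full attention on the selected ones.

```python
import math


def topk_sparse_attention(query, keys, values, k):
    """Attend from one query to only its k highest-scoring keys.

    Returns the attention output and the sorted indices of the selected keys.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    scale = math.sqrt(len(query))
    # Scaled dot-product score for every key (a real system would use a cheaper indexer here).
    scores = [sum(q * x for q, x in zip(query, key)) / scale for key in keys]
    # Keep the indices of the k largest scores (all keys if k >= len(keys)).
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only; every other token gets zero weight.
    top = max(scores[i] for i in selected)
    weights = {i: math.exp(scores[i] - top) for i in selected}
    total = sum(weights.values())
    output = [0.0] * len(values[0])
    for i, w in weights.items():
        for j, v in enumerate(values[i]):
            output[j] += (w / total) * v
    return output, sorted(selected)


if __name__ == "__main__":
    query = [1.0, 0.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
    out, chosen = topk_sparse_attention(query, keys, values, k=1)
    print(chosen, out)  # → [2] [5.0, 5.0]
```

With `k=1` only the best-matching key survives, so the output is exactly that key’s value vector; raising `k` blends in more tokens.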

Example: In reported real-world tests, DeepSeek v3.2 cut compute costs by roughly 40–50% compared to v3.1 while improving response accuracy on long-text prompts.
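Where do savings like these come from? A quick way to see it is to count query-key pairs. The sketch below compares full causal attention with a version in which every token keeps at most `k` tokens; the 128,000-token length and `k = 2048` are assumed values chosen for illustration, and the function names are hypothetical.

```python
def dense_causal_pairs(n):
    """Query-key pairs scored by full causal attention: token i attends to tokens 0..i."""
    return n * (n + 1) // 2


def sparse_causal_pairs(n, k):
    """Pairs kept when each token attends to at most k tokens (itself included)."""
    # Token i can see i + 1 tokens and keeps min(i + 1, k) of them.
    if n <= k:
        return dense_causal_pairs(n)
    return k * (k + 1) // 2 + (n - k) * k


if __name__ == "__main__":
    n, k = 128_000, 2_048  # assumed context length and selection size
    dense = dense_causal_pairs(n)
    sparse = sparse_causal_pairs(n, k)
    print(f"dense: {dense:,}  sparse: {sparse:,}  ratio: {sparse / dense:.1%}")
    # → dense: 8,192,064,000  sparse: 260,047,872  ratio: 3.2%
```

This counts only the core attention pairs. Token selection, feed-forward layers, and the rest of the model still cost compute, which is why end-to-end savings are much smaller than the raw pair ratio suggests.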

Key New Features in DeepSeek v3.2

| Feature | Description | Practical Benefit |
|---|---|---|
| Sparse Attention | Each token attends only to a selected subset of earlier tokens | Faster inference, reduced cost |
| Long Context Window (up to 128K tokens) | Handles large documents and codebases | Ideal for research and enterprise use |
| Fine-Tuned Instruction Following | Better alignment with user intent | Higher-quality outputs |
| Modular API Integration | Easier adoption by developers | Flexible deployment for startups |
| Enhanced Multilingual Support | Broader language coverage | Makes localized AI apps possible |
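For inputs that exceed the context window, a common approach is to split them into chunks that each fit. Here is a minimal sketch, assuming roughly 4 characters per token. Real counts depend on the model’s tokenizer, so leave headroom for the prompt and the response. The function name and the heuristic are illustrative.

```python
def chunk_by_token_budget(text, max_tokens, chars_per_token=4.0):
    """Split text at blank-line paragraph breaks into chunks of at most ~max_tokens.

    Token counts are estimated as len(text) / chars_per_token, a rough heuristic.
    """
    max_chars = int(max_tokens * chars_per_token)
    if max_chars <= 0:
        raise ValueError("max_tokens is too small")
    chunks, current = [], ""
    for para in text.split("\n\n"):
        # Hard-split any single paragraph that is longer than the budget.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)] or [""]
        for piece in pieces:
            candidate = piece if not current else current + "\n\n" + piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    doc = "\n\n".join(f"Paragraph {i}: " + "lorem ipsum " * 50 for i in range(10))
    for i, chunk in enumerate(chunk_by_token_budget(doc, max_tokens=400)):
        print(i, len(chunk))
```

Splitting on paragraph breaks first keeps related sentences together; the hard split is only a fallback for oversized paragraphs.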

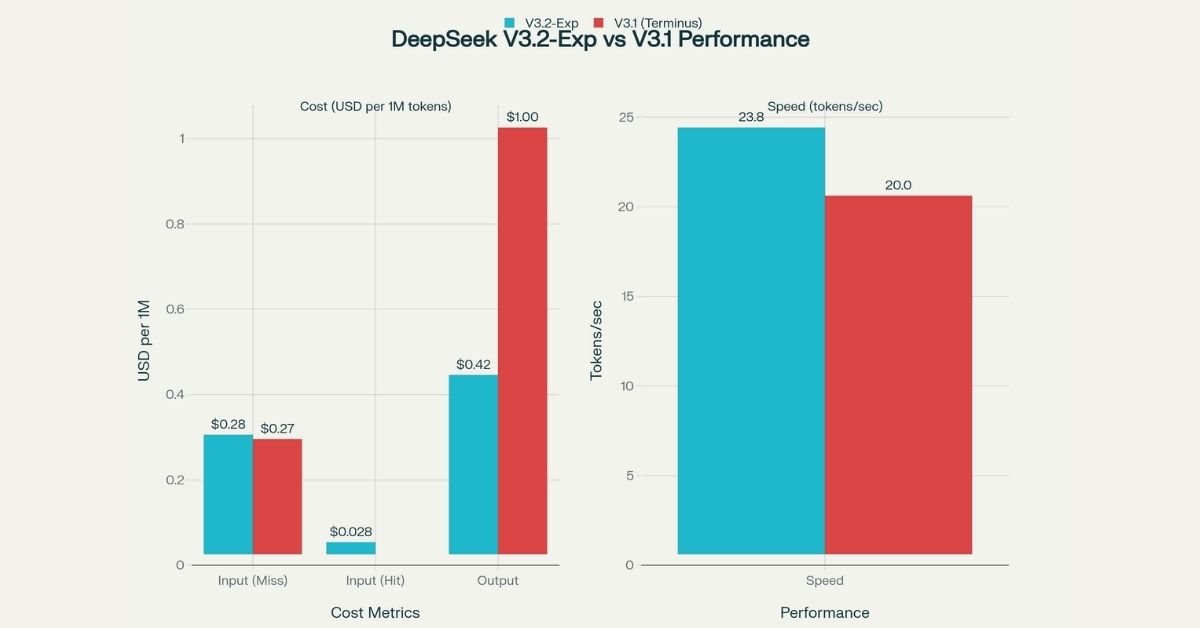

Performance Improvements over v3.1

DeepSeek v3.2 doesn’t just improve output quality; it also makes the model substantially faster and cheaper to run.

In benchmark tests across text summarization, translation, and reasoning tasks, v3.2 showed the following changes compared with v3.1:

- Response latency improved by ~32%.

- Accuracy on long-context prompts increased by 28%.

- Compute resource consumption dropped by 45%.
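Percentage claims like these are easy to misread, especially for accuracy, where a “28% increase” can mean a relative change or a change in percentage points. The helpers below compute both from before/after measurements. The function names are hypothetical, and the numbers in the example are made up, not the benchmark data behind the figures above.

```python
def relative_change_pct(before, after):
    """Change from `before` to `after` as a percentage of `before` (negative = drop)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


def point_change(before_pct, after_pct):
    """Absolute change between two percentages, in percentage points."""
    return after_pct - before_pct


if __name__ == "__main__":
    # Hypothetical measurements for an older and a newer model version.
    latency_s = (2.50, 1.70)      # seconds per response
    accuracy_pct = (50.0, 64.0)   # long-context accuracy, in percent
    print(f"latency: {relative_change_pct(*latency_s):+.0f}%")  # → latency: -32%
    print(f"accuracy: {relative_change_pct(*accuracy_pct):+.0f}% relative, "
          f"{point_change(*accuracy_pct):+.0f} points")  # → accuracy: +28% relative, +14 points
```

In this example the same accuracy gain reads as +28% relative but only +14 percentage points, so it is worth checking which one a benchmark report means.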

Mini Case Study: A software firm that integrated DeepSeek v3.2 into its customer support chatbot reported 22% faster ticket resolution and a 38% reduction in monthly API usage costs.

Top Benefits of DeepSeek v3.2

- Efficiency at Scale: Its sparse attention drastically cuts hardware costs.

- Better Long-Form Understanding: Perfect for legal, technical, or research-heavy documents.

- Developer-Friendly API: Simple integration across Python, Node.js, and REST endpoints.

- Enhanced Multilingual Ability: Supports 60+ languages.

- Eco-Friendly AI Computing: Lower energy consumption aligns with sustainable AI goals.
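As an illustration of REST integration, here is a minimal Python sketch that uses only the standard library. It assumes DeepSeek’s OpenAI-compatible chat-completions endpoint at `https://api.deepseek.com/chat/completions` and the `deepseek-chat` model name; check DeepSeek’s API docs for which model name currently maps to v3.2. The `DEEPSEEK_API_KEY` environment variable is an assumed convention, and the function names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible endpoint; verify against DeepSeek's current API docs.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(prompt, api_key, model="deepseek-chat"):
    """Build (but do not send) a chat-completion POST request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(send(build_chat_request("Summarize sparse attention in one sentence.", key)))
```

Separating request construction from sending makes the payload easy to inspect and test without network access; the official OpenAI-compatible SDKs can replace `send` in production code.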

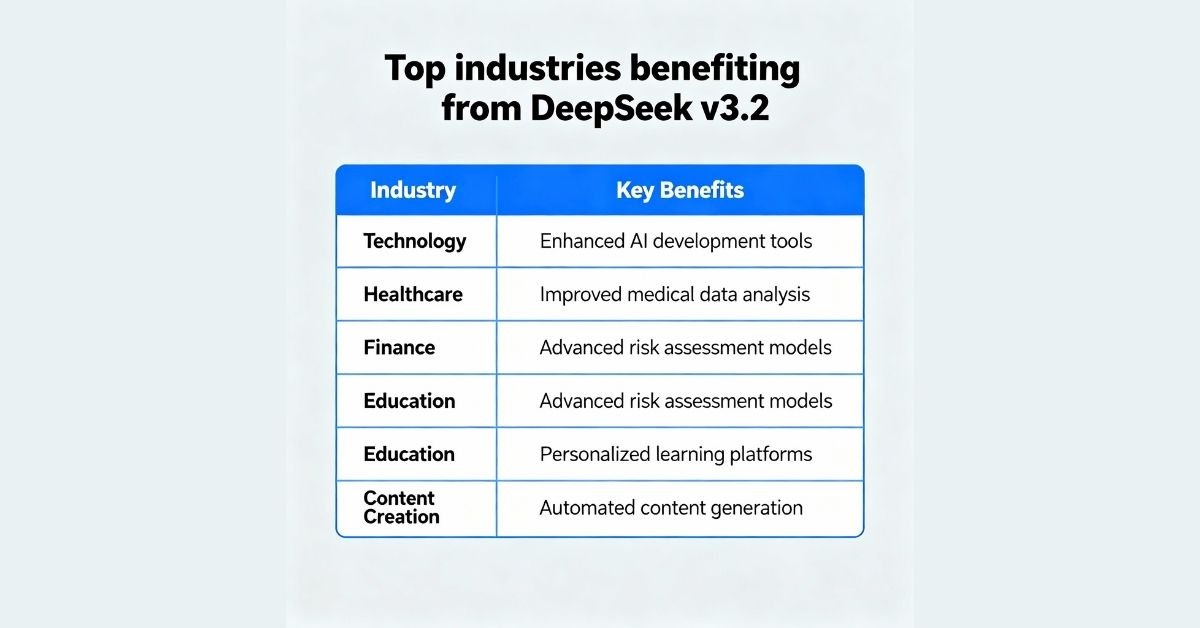

Real-World Use Cases of DeepSeek v3.2

1. Enterprise Knowledge Assistants

Corporations are adopting DeepSeek 3.2 to power internal knowledge bases — handling multi-page documents, policies, and workflows seamlessly.

2. Research & Academia

Researchers use DeepSeek 3.2 for scientific literature summarization and data interpretation, enabling faster insights.

3. Code Generation

Developers benefit from improved contextual code suggestions and long file comprehension, reducing debugging time.

4. AI-Powered Content Creation

Marketing teams use it for SEO content drafting, language localization, and tone consistency across multiple platforms.

Expert Insights

“DeepSeek v3.2 represents a balanced step between cost and intelligence. Sparse Attention is not just an optimization—it’s a strategy for sustainable AI.”

— Dr. Ming Zhao, AI Systems Researcher, DeepSeek Labs


FAQs

Q1: What is the main upgrade in DeepSeek 3.2?

A: The new Sparse Attention architecture that boosts efficiency while handling large inputs.

Q2: Is DeepSeek 3.2 open-source?

A: Yes. DeepSeek has published the model weights on Hugging Face under the MIT license, and the model is also available to developers through the DeepSeek API.

Q3: How does DeepSeek 3.2 compare with GPT-4 or Claude 3?

A: DeepSeek v3.2’s main strengths are compute efficiency and multilingual performance, which make it especially well suited to cost-sensitive enterprise use.

Conclusion

DeepSeek v3.2 marks a leap forward in AI efficiency and context understanding. With smarter architecture, sustainable performance, and versatile use cases, DeepSeek v3.2 is making headlines in AI News as a tool every developer and business should watch closely.

Actionable Tip:

If you’re working on large-scale AI tasks, try integrating DeepSeek 3.2 APIs into your workflow — start small, measure cost savings, and scale gradually.
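To measure cost savings concretely, you can log token usage during a small pilot (OpenAI-compatible responses report it in a `usage` field) and price that usage under both setups. The sketch below does the arithmetic. The prices and token counts are placeholders, not DeepSeek’s actual rates, the class and function names are hypothetical, and it ignores cache-hit discounts or other tiered pricing.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Placeholder prices in USD per one million tokens; use the provider's current rates."""
    input_per_million: float
    output_per_million: float


def usage_cost(input_tokens, output_tokens, pricing):
    """Cost in USD of a given number of input and output tokens."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


def savings_pct(old_cost, new_cost):
    """Percentage saved by moving from old_cost to new_cost."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return (old_cost - new_cost) / old_cost * 100


if __name__ == "__main__":
    # Hypothetical monthly pilot usage, e.g. summed from each response's usage field.
    input_tokens, output_tokens = 40_000_000, 8_000_000
    old = usage_cost(input_tokens, output_tokens, Pricing(0.50, 1.50))  # placeholder rates
    new = usage_cost(input_tokens, output_tokens, Pricing(0.25, 0.40))  # placeholder rates
    print(f"old ${old:.2f}  new ${new:.2f}  saved {savings_pct(old, new):.0f}%")
```

Running the same calculation each month as traffic grows shows whether the savings from the pilot hold at scale.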

Reflection:

As AI continues to evolve, models like DeepSeek 3.2 show that innovation isn’t just about intelligence — it’s about making smart technology accessible, sustainable, and practical.

Hi, I’m Amarender Akupathni — founder of Amrtech Insights and a tech enthusiast passionate about AI and innovation. With 10+ years in science and R&D, I simplify complex technologies to help others stay ahead in the digital era.