To be honest, AI-driven termination decisions may be fast, but they are often flawed. HR professionals who rely on algorithms to decide who stays and who goes risk creating workplaces that feel cold, unfair, and more focused on data than on people.

Yes, technology promises fairness. But do AI-driven termination decisions actually make things fair? Understanding why this question matters to the AI community is crucial.

The use of AI-driven termination decisions isn’t just an HR experiment; it’s a societal experiment. Companies place people’s jobs in the hands of algorithms, assuming machines can’t be biased. Yet we’ve seen AI inherit prejudice from biased data, whether in employment, policing, or credit scoring.

The AI community has a responsibility here. When computers select whom to fire, the effects are instant and life-changing. Employees are not simply “data points”; they are people with careers, families, and bills to pay.

Therefore, the question arises: Can we truly rely on AI to make decisions about terminating employees given the significant risks involved?

Main Risks of AI in Firing Employees:

- AI can reproduce historical biases found in HR data.

- Algorithms could unfairly target women, minorities, or other groups.

- Automated decisions can erode empathy and sound judgment among managers.

- Employees are treated as “data points” instead of people.

- When AI makes a mistake, the consequences can be immediate and life-changing.

The Argument for AI Driven Termination Decisions in HR

Let’s be honest. HR departments are using these tools for a reason. AI-driven termination decisions can surface trends that people might overlook. For instance, they can point out employees who have been consistently underperforming, notice patterns in absenteeism, or flag employees who have been disengaged for long periods.

For organizations that have to lay off workers, automation also eases the emotional burden on managers. Instead of one leader making harsh calls alone, they can lean on “objective” data.

Being efficient and consistent is important. But do the potential benefits of AI-driven termination decisions outweigh the dangers?

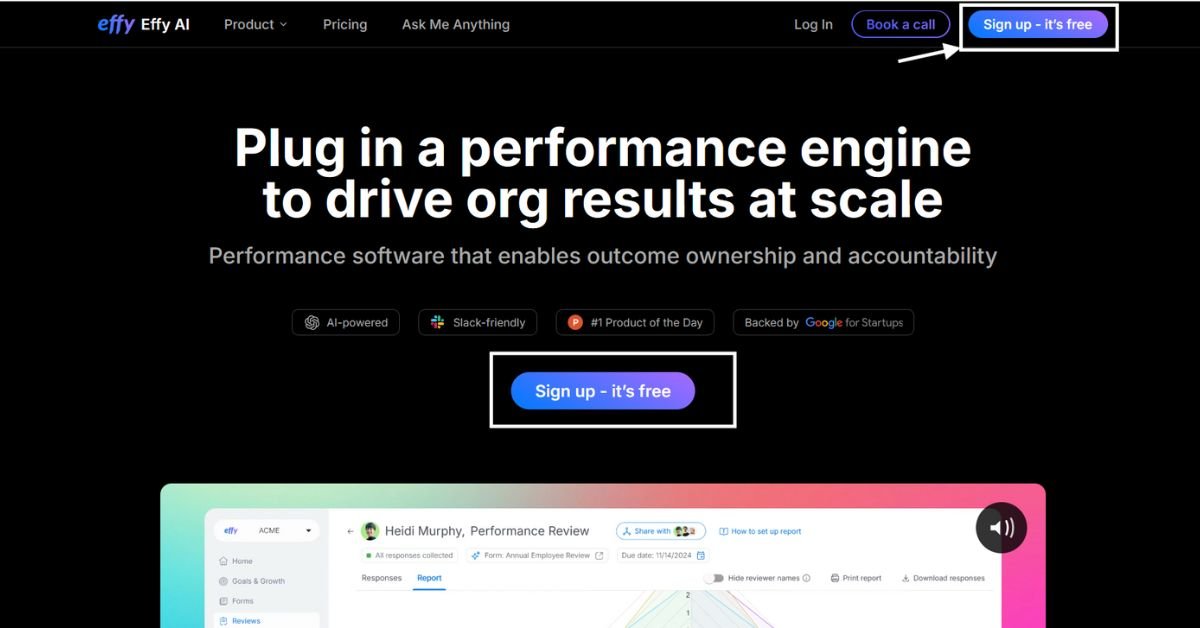

Companies are already using a number of AI-powered HR technologies to help them make these choices. For instance, Effy AI, 15Five, and Lattice assist in keeping track of performance data and showing patterns in disengagement.

Note: Screenshots shown in this section are from Effy AI, used here purely for illustration of how AI tools support HR processes. The blog does not endorse this software, and similar functionality may exist in other HR tools.

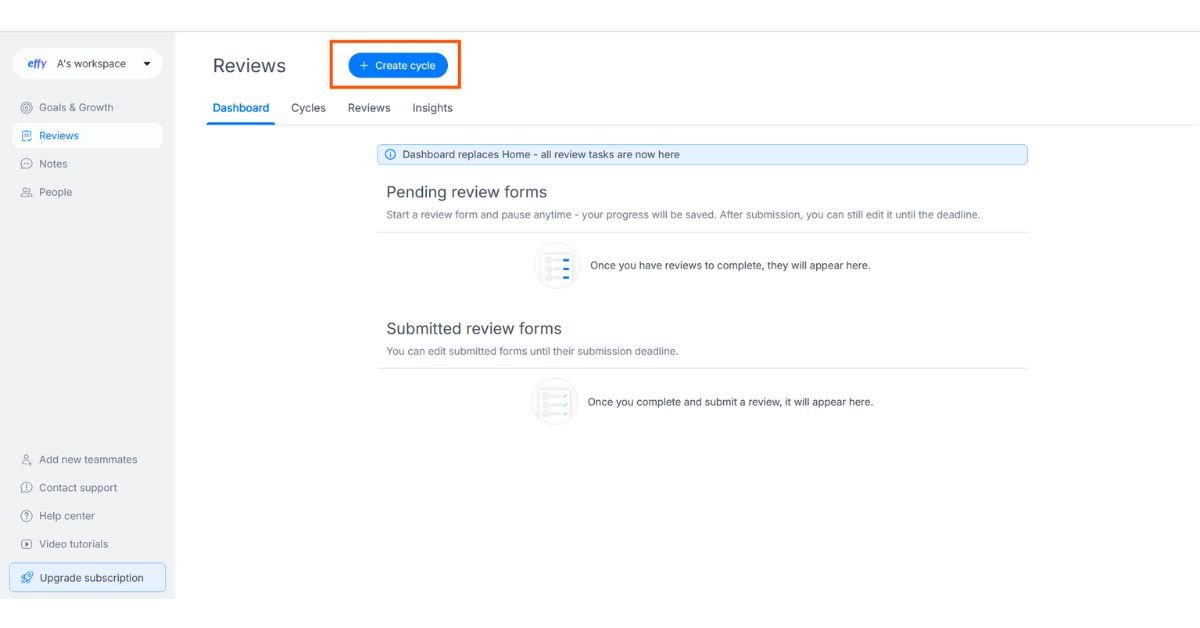

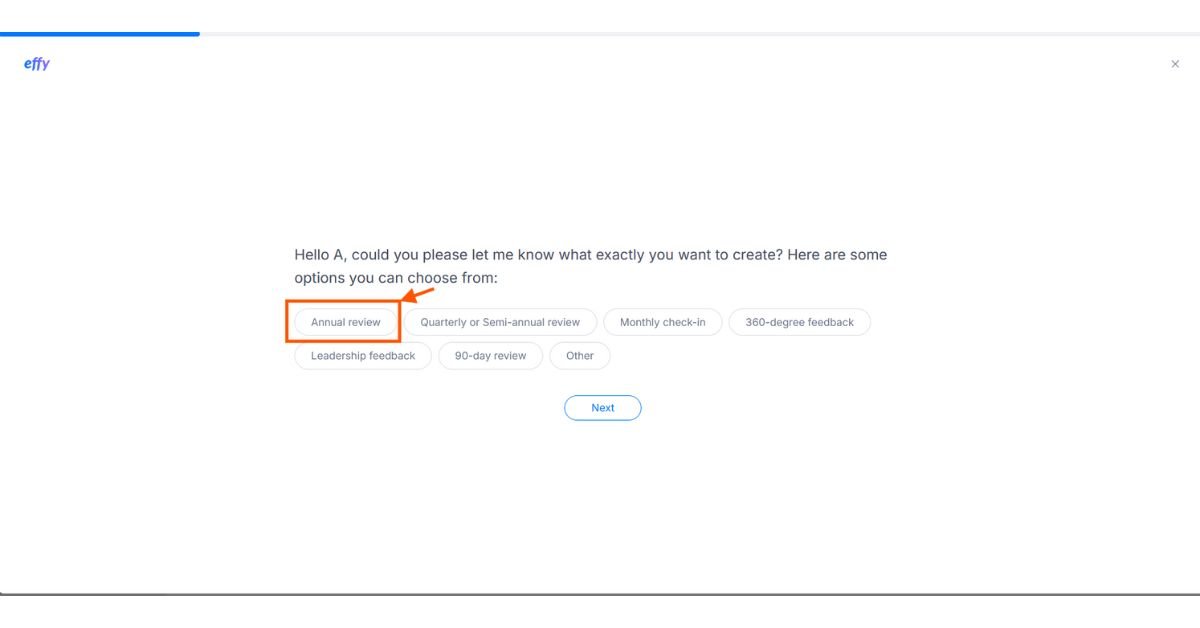

This is the main interface for Effy AI. HR managers can start a new performance evaluation cycle from here. The UI is clear and designed to guide users through each stage. This is where AI-assisted performance management begins.

It’s easy to start reviews using Effy AI. By choosing “Create Cycle,” managers can initiate structured assessment processes with minimal preparation. This makes HR work less manual and more consistent.

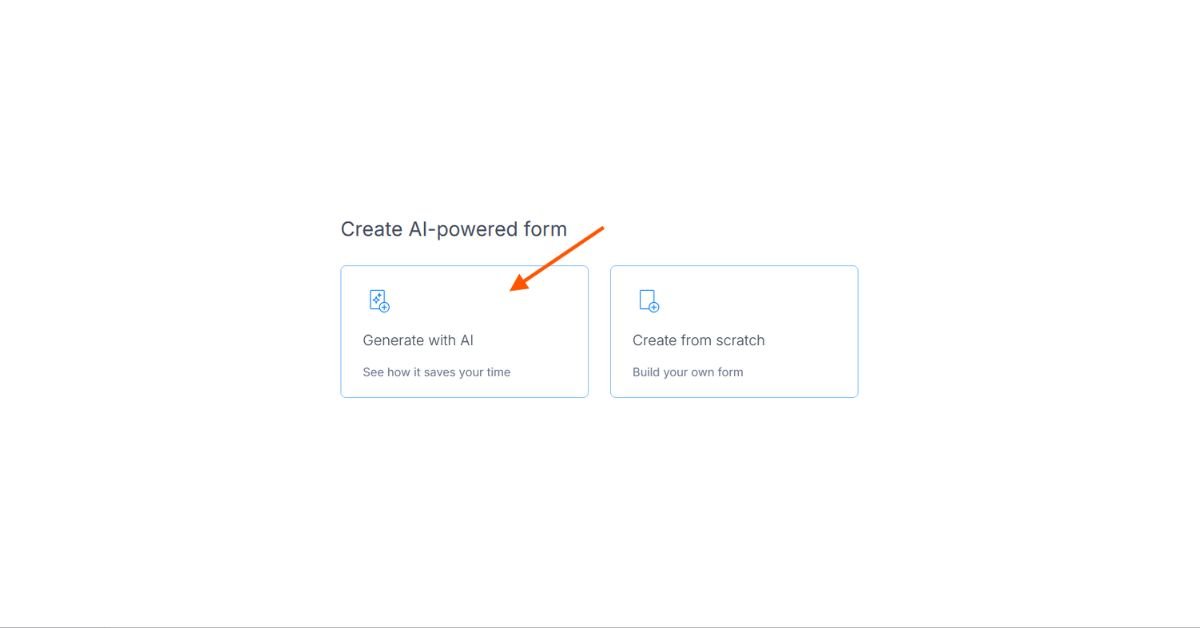

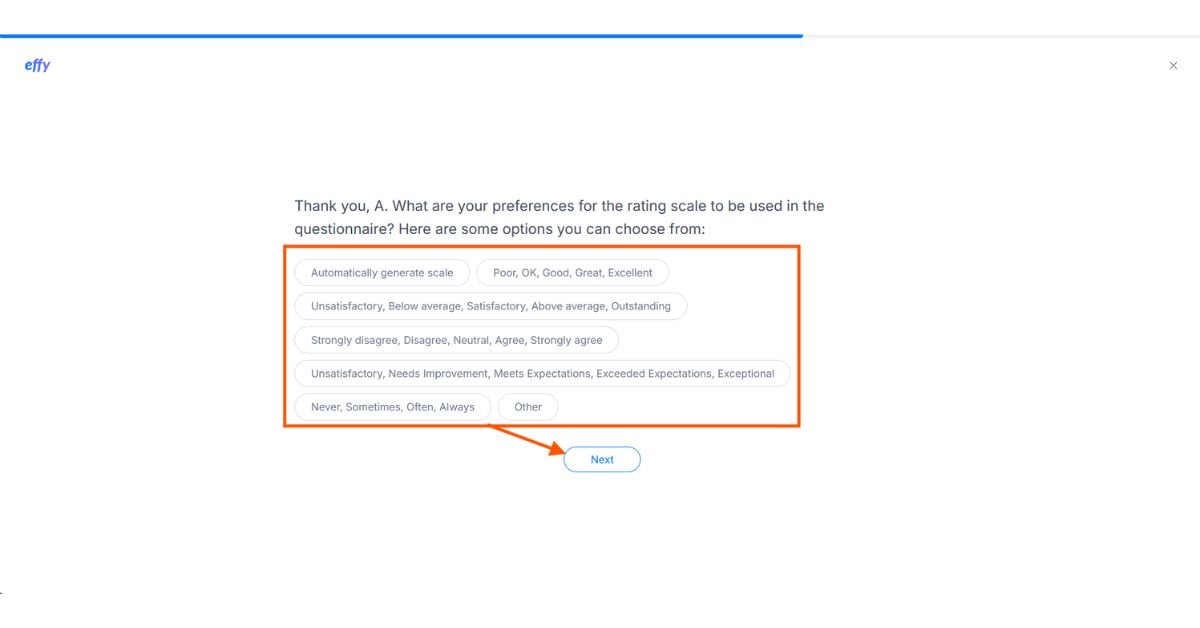

Here, Effy AI gives you options. Managers can either let AI generate a performance evaluation form or build one from scratch. This hybrid option strikes a balance between automation and human customization.

HR departments can pick the style of evaluation that works best for their company, such as yearly reviews or 360° feedback. This flexibility lets businesses tailor performance management to their needs instead of using a one-size-fits-all approach.

How AI can help HR:

- AI monitors the level of engagement and productivity among employees.

- It identifies, at an early stage, employees who are underperforming or losing interest in their work.

- It provides well-organized and consistent evaluation data.

- It assists managers in making decisions grounded in facts rather than emotions.

- It enhances the efficiency of HR work by automating repetitive tasks.

This screenshot shows the reach and engagement of my LinkedIn post “HR’s Shocking Secret is OUT!” It received 30 impressions and reached 21 members, which suggests that professionals are interested in HR and AI topics. Sharing insights on AI-driven HR decisions can spark meaningful discussions. The original post is available on LinkedIn under that title.

The Problems with Algorithmic Firings

Hidden bias is a major problem with AI-driven termination decisions. Algorithms learn from historical data shaped by human prejudice. If past supervisors ranked women lower or penalized minorities for “not fitting the culture,” the AI learns those patterns and repeats them.

Look at Amazon. Its experimental hiring algorithm reportedly lowered the score of resumes that contained the word “women’s.” Why? Because the algorithm had been trained on about a decade of resumes, most of which came from men.

Imagine applying the same flawed reasoning in AI-driven termination decisions. The system may spot “patterns” tied to prejudice, causing employees to lose their jobs even when performing well.

Would you want an automated system to evaluate your job performance?

Some programs, like Onwards HR, go even further. They offer an “Adverse Impact Analysis” option that checks whether planned layoffs fall disproportionately on particular groups. This may seem fair, but it still depends on how good the data is, and biased data can easily skew the conclusions.
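To make the idea concrete, here is a minimal sketch of one common way an adverse impact check can work. It assumes the "four-fifths" rule of thumb from the US EEOC's Uniform Guidelines: a group is flagged when its rate of favorable outcomes (here, being retained) is below 80% of the best-off group's rate. The function name, the `threshold` parameter, and the sample data are my own illustrations. This is not how Onwards HR or any other vendor implements its feature.

```python
from collections import Counter


def adverse_impact_ratios(records, threshold=0.8):
    """Compare retention rates across groups.

    records: iterable of (group, laid_off) pairs, where laid_off is a bool.
    threshold: assumed four-fifths cutoff (0.8); a rule of thumb, not a legal test.
    Returns {group: {"retention_rate", "impact_ratio", "flagged"}}.
    """
    totals = Counter()
    retained = Counter()
    for group, laid_off in records:
        totals[group] += 1
        if not laid_off:
            retained[group] += 1

    # Retention is the favorable outcome in a layoff, so rates are based on it.
    rates = {g: retained[g] / totals[g] for g in totals}
    best = max(rates.values())

    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[group] = {
            "retention_rate": rate,
            "impact_ratio": ratio,
            "flagged": ratio < threshold,
        }
    return report


# Hypothetical layoff list: 10 of 100 laid off in group_a, 40 of 100 in group_b.
records = (
    [("group_a", False)] * 90 + [("group_a", True)] * 10
    + [("group_b", False)] * 60 + [("group_b", True)] * 40
)
report = adverse_impact_ratios(records)
for group, stats in sorted(report.items()):
    print(group, round(stats["impact_ratio"], 2), stats["flagged"])
```

Notice what the check cannot do. It only compares outcomes between the groups recorded in the data. If group labels are missing or wrong, or if the performance scores feeding the layoff list were biased to begin with, the ratio can look healthy while the process is not.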

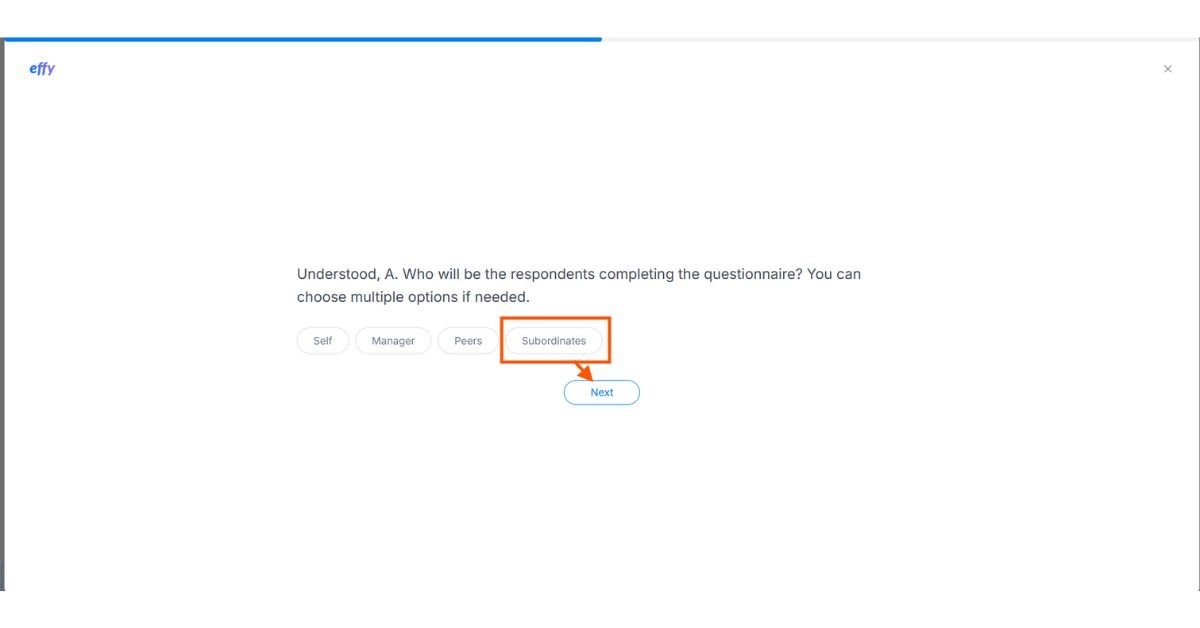

Performance evaluations aren’t limited to managers. With Effy AI, you can collect feedback from many different people, including colleagues, subordinates, and even the employees themselves. This gives you a fuller picture of how well your employees are doing.

AI’s Limitations in Making Firing Decisions:

- There is no such thing as pure objectivity; AI picks up on human prejudices.

- No algorithm can fully grasp an employee’s circumstances.

- Cultural, linguistic, and personal backgrounds are typically overlooked.

- In delicate situations, AI can’t replace human empathy.

- Too much dependence on AI can hurt morale at work.

A Real Story: AI Driven Termination Decisions in a Call Center

I once worked as a consultant for a call center that was using AI to make decisions about firing people. The system told managers to lay off staff who had “low customer sentiment scores.” It seemed fair on paper.

But when we looked closer, we saw that the AI-driven termination decisions punished workers who spoke English with strong accents. Customers gave them lower ratings, not because of poor service but because of prejudice. The AI absorbed this bias and labeled these workers as “underperformers.”

What happened? People who were good at their jobs and worked hard almost lost them over something they couldn’t help.

That is not efficiency. That is discrimination written into code.
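The check we ended up doing by hand can be sketched in a few lines. The idea is to compare a subjective metric (customer sentiment) with a more objective one (whether the issue was resolved) across groups. If the subjective score differs sharply between groups while the objective one does not, the score is probably measuring something other than performance. Everything here is hypothetical: the field names, the `tolerance` value, and the sample rows are invented for illustration and are not the client's data or system.

```python
from statistics import mean


def group_means(rows, group_key, metrics):
    """Return {metric: {group: mean value}} for the given rows."""
    groups = {}
    for row in rows:
        groups.setdefault(row[group_key], []).append(row)
    return {
        metric: {g: mean(r[metric] for r in rs) for g, rs in groups.items()}
        for metric in metrics
    }


def sentiment_gap_is_suspicious(rows, group_key, subjective, objective, tolerance=0.05):
    """True when groups differ on the subjective metric but not on the objective one.

    tolerance is an assumed cutoff for "roughly equal"; pick it to suit your scale.
    """
    means = group_means(rows, group_key, [subjective, objective])

    def spread(metric):
        values = means[metric].values()
        return max(values) - min(values)

    return spread(subjective) > tolerance and spread(objective) <= tolerance


# Hypothetical call records: sentiment is on a 0-1 scale, resolved is 0 or 1.
calls = [
    {"group": "A", "sentiment": 0.82, "resolved": 1},
    {"group": "A", "sentiment": 0.78, "resolved": 1},
    {"group": "A", "sentiment": 0.80, "resolved": 0},
    {"group": "B", "sentiment": 0.61, "resolved": 1},
    {"group": "B", "sentiment": 0.59, "resolved": 1},
    {"group": "B", "sentiment": 0.60, "resolved": 0},
]
print(sentiment_gap_is_suspicious(calls, "group", "sentiment", "resolved"))
```

A flag from a check like this is a reason for a person to investigate, not proof of bias. Real data would also need enough calls per group for the averages to mean anything.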

In the final step, HR managers can adjust settings such as deadlines, anonymity, and assessment criteria. Once set up, the review cycle is ready to run. This level of oversight helps ensure that AI supports HR decisions instead of taking them over.

The Illusion of Being Objective

Many CEOs claim that AI-driven termination decisions are fairer than managers’ subjective judgments. But here’s the catch: true objectivity doesn’t exist.

- People make algorithms. History shapes data. Leaders choose the metrics. There is prejudice at every level.

- It’s like building a house on unstable ground. The foundation is bad, no matter how nice the design is.

HireVue and Knockri are two hiring tools that claim to make hiring choices more objective by analyzing interviews and candidate answers. But these technologies have also raised concerns about bias, showing that “objective” systems frequently reflect human prejudice.

The Human Cost of AI Driven Termination Decisions

There’s another issue beyond bias: dignity. Losing a job is one of life’s most distressing experiences. Employees feel dehumanized when AI-driven termination decisions replace face-to-face conversations.

- Imagine receiving an email stating, “Your employment has been concluded due to performance data.”

- There is no explanation provided. No feelings. Just numbers.

- Does that fit with what companies say they stand for?

Leena AI and other AI assistants already deliver HR communications through chatbots and other automated channels. These methods may be efficient, but they risk stripping away the respect and understanding that employees expect during sensitive conversations.

The Other Side: Can Bias Be Fixed?

Some say that better data and transparent design can address these problems. They recommend auditing algorithms, retraining models, and making sure the input data is diverse.

And certainly, these actions are important. Responsible AI practices can help make AI-driven termination decisions less biased. But let’s be honest. Regardless of the quality of its creation, AI remains incapable of comprehending the intricacies of human life.

Humu and other platforms, on the other hand, focus on the positive side of AI by giving individualized “nudges” to improve performance, retention, and morale. These technologies prove that AI can help workers, but they can’t replace real human understanding.

It’s not just numbers that show performance. AI-driven termination decisions can’t account for context, teamwork, resilience, or growth. How can a model understand what someone is going through during a pandemic? Could it grasp the struggles of a single mother working late at night?

- No dataset can completely represent the human experience.

- In my opinion, AI should assist rather than make decisions.

This is where I stand: AI-based decisions about firing people should never be final. AI can help HR by finding trends, offering insights, and pointing out risks. But people must make the final choice.

People are empathetic. They can ask questions, interpret context, and weigh things that can’t be measured. Machines can’t.

I’d rather trust a boss who makes mistakes but cares than an algorithm that works perfectly but is cold.

What’s Next for AI Driven Termination Decisions?

The AI community has to be clear about what it stands for. If businesses use AI to make decisions about firing people, they must:

- Be Transparent: make sure employees know how algorithms rate them.

- Conduct Bias Audits: regularly review outcomes for unfair patterns.

- Keep People in Charge: a person should always make the final decision.

- Give Employees the Right to Appeal: they should be able to fairly challenge the results of algorithms.

If these protections aren’t in place, AI-driven termination decisions might turn workplaces into digital factories where people stop caring about each other.
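Here is a minimal sketch of how three of these protections (transparency, a human in charge, and the right to appeal) could be built into the data model itself, so that an AI recommendation can never become final on its own. The class, its fields, and the example values are hypothetical and do not describe any real HR product. Bias audits are a separate process and are not covered here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TerminationRecommendation:
    """An AI recommendation that stays a recommendation until a human signs off."""

    employee_id: str
    model_score: float
    explanation: str  # plain-language reasons shared with the employee (transparency)
    reviewer: Optional[str] = None
    approved: Optional[bool] = None
    appeal_open: bool = False

    def review(self, reviewer: str, approved: bool) -> None:
        """Record a named human's decision; this also closes any open appeal."""
        if not reviewer:
            raise ValueError("A named human reviewer is required")
        self.reviewer = reviewer
        self.approved = approved
        self.appeal_open = False

    def appeal(self) -> None:
        """The employee contests the outcome, which reopens the case for review."""
        self.appeal_open = True
        self.approved = None

    @property
    def is_final(self) -> bool:
        # Final only with human approval and no pending appeal.
        return self.approved is True and self.reviewer is not None and not self.appeal_open


rec = TerminationRecommendation("E-001", 0.91, "Low customer sentiment scores last quarter")
print(rec.is_final)  # the model's score alone decides nothing
rec.review("hr_manager", approved=True)
rec.appeal()
print(rec.is_final)  # an open appeal blocks the decision again
```

The design choice is that nothing outside `review` can set the approval, and `review` refuses to run without a named person. The model's score is just one input that the reviewer sees alongside the explanation.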

Best Ways to Use AI in HR:

- Let people make the final decisions.

- Run frequent bias checks on AI tools.

- Tell workers how AI ranks their work.

- Allow workers to contest decisions made by AI.

- Use AI to assist human decision-making, not to replace it.

Last Thoughts

Technology should make work better, not worse. AI-driven decisions to fire people may appear efficient, but they take away fairness and compassion.

As AI specialists, HR executives, and workers, we need to ask ourselves: do we want machines to decide our future? Or do we want companies where technology helps people instead of taking their place?

I think the answer is obvious. AI should only inform decisions about firing people; it should never act as judge, jury, and executioner.

People are not just numbers. They are stories, struggles, and hopes. And no algorithm should ever tell them how much they’re worth.

Disclaimer: The screenshots displayed here are provided solely as examples. They were taken from Effy AI’s public interfaces and altered in Canva to show how they work. This blog is not an official representation or endorsement of Effy AI. The goal is only to teach people how AI-powered HR products work.

Last updated on August 24, 2025

Hi, I’m Amarender Akupathni — founder of Amrtech Insights and a tech enthusiast passionate about AI and innovation. With 10+ years in science and R&D, I simplify complex technologies to help others stay ahead in the digital era.