Many AI enthusiasts have recently started using HeyGen AI to create realistic videos quickly. However, alarming rumors of a potential HeyGen AI data breach have left many users worried. Imagine spending hours on a project, only to discover that your private data is out in the open. That is the issue this post addresses.

If you’re exploring other AI video tools, check out our guide on 6 Best AI Video Generators to Easily Create Videos from ChatGPT Prompts.

Data leaks at HeyGen AI and other AI platforms harm privacy, intellectual property, and user trust. The consequences can be severe if someone gains access to your content, passwords, or personal information. AI users and developers should understand the reported HeyGen AI data leak before uploading sensitive information.

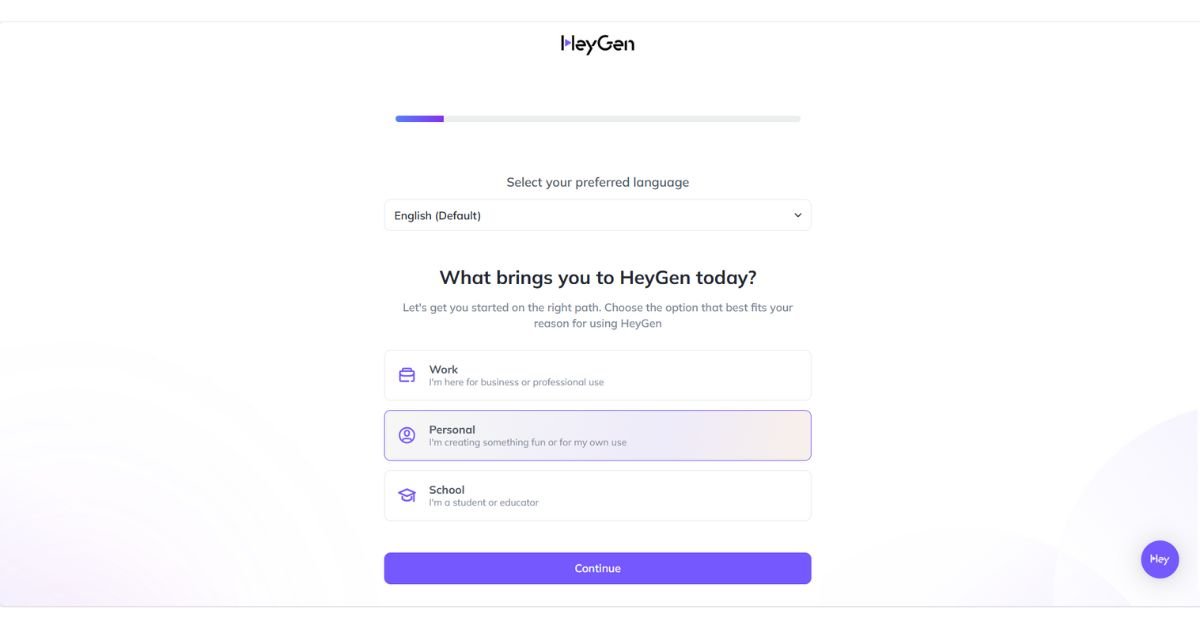

The app’s interface, shown here as a first-time user sees it, illustrates how easily users log in and start generating content. It also illustrates how personal data enters the system.

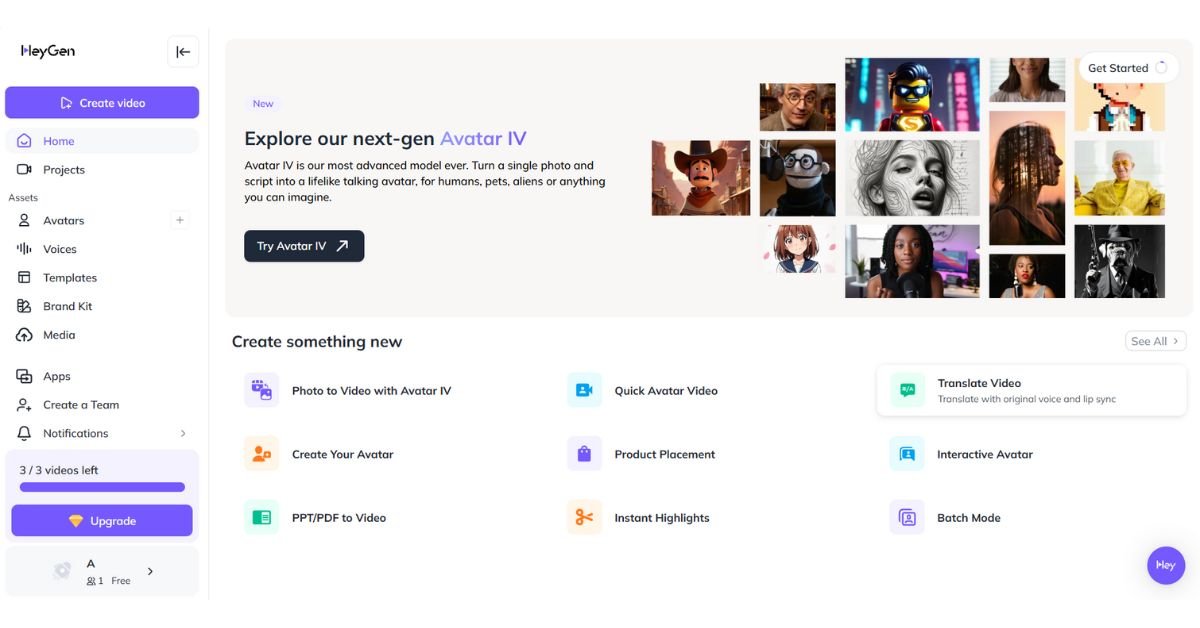

The dashboard gives users an overview of the available AI tools for content creation. It also shows how user data is incorporated into the system, including the types of data uploaded once you log in, which underlines the importance of data security on AI platforms.

HeyGen AI Data Leak Is the Problem

In plain terms, reports suggest that a breach at HeyGen AI may have exposed user data, including uploaded videos, images, and account information.

- In many cases, users were not aware that other people could see their data.

- AI-generated material, which is sometimes sensitive, was at risk.

- Developers may have unknowingly shared datasets containing private information.

The wider problem is user awareness, not just this one breach. Many people assume that AI products like HeyGen AI are completely safe. That assumption can lead to data loss, legal issues, and reputational damage.

The data at risk may include account credentials, avatar data, and metadata embedded in videos and images. Even metadata embedded in AI-generated avatars can potentially reveal personal information.

Why It’s Important to Solve This

If you ignore the security and privacy risks of HeyGen AI, you could face:

- Loss of sensitive material or intellectual property

- Exposure of private or business information

- Reduced trust in AI video generation tools and platforms

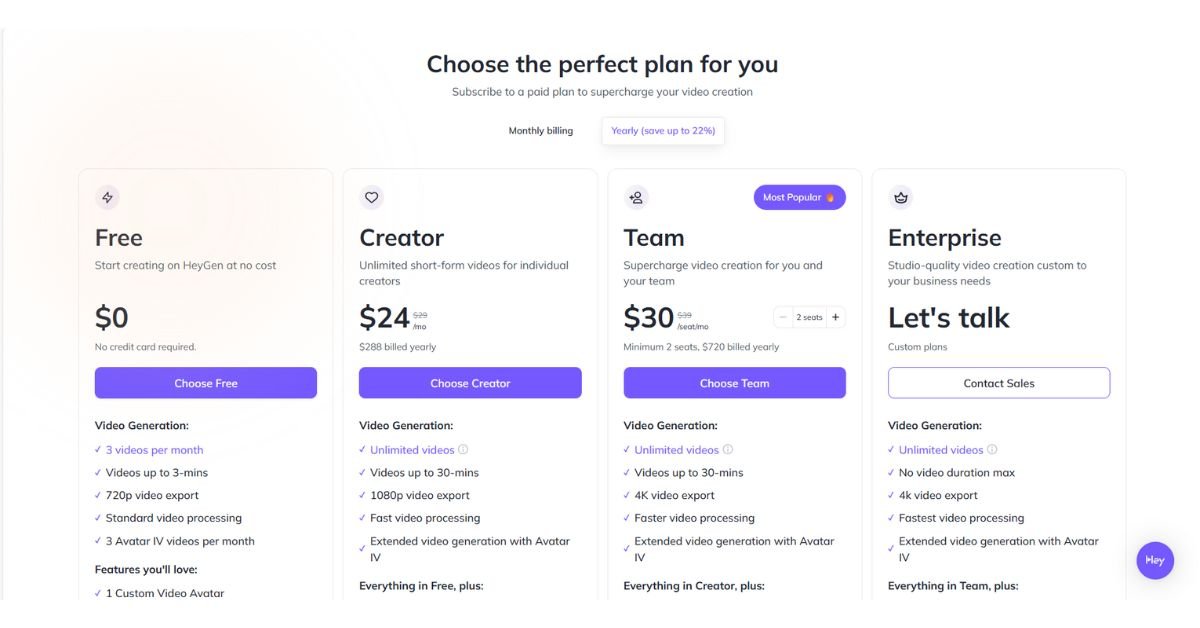

Subscription plans come in several tiers with different features, and the plan you choose can affect data storage and access. Higher-priced plans may let you store more data or unlock more powerful capabilities, which can increase how much is exposed in a breach. Knowing what your plan stores helps you judge how affected you might be, use the platform with appropriate care, and compare the privacy practices of different plans.

Addressing the HeyGen AI data breach helps users regain control, keep working on AI projects safely, and set higher security standards for future workflows.

How to Protect Yourself Step-by-Step

When using HeyGen AI or a comparable AI platform, the following steps can help protect your data:

1. Limit uploads of sensitive data.

- Don’t upload private pictures, business logos, or confidential videos.

- For testing, use data that has been anonymized or set aside for that purpose.

- For example, a marketing team replaced client branding with generic images before testing HeyGen AI to lower the risk of exposure.

- Tip: Avoid uploading client videos; test with dummy clips.

Users enter both personal and work information when they create content, and that is the kind of information that could be leaked in a breach. Seeing how information flows into the AI system raises awareness of the privacy risks, and it explains why limiting uploads and testing with anonymized data make sense.
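The advice in step 1 to test with anonymized data can be sketched in a few lines of Python. This is a minimal illustration, not a complete anonymizer: the function name `anonymize_script`, the placeholder tokens, and the two regular expressions are assumptions made for this example and are not part of HeyGen AI or any other platform. It redacts emails, phone-like numbers, and known client names from a video script before the text is pasted into an online tool.

```python
import re
from typing import Iterable

# Hypothetical patterns for illustration only; real projects may need broader rules.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize_script(text: str, client_names: Iterable[str]) -> str:
    """Return a copy of `text` with emails, phone numbers, and client names replaced."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    for name in client_names:
        # Literal, case-insensitive match on each client name.
        text = re.sub(re.escape(name), "[CLIENT]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    draft = "Welcome to Acme Corp! Contact jane.doe@acme.com or +1 (555) 010-2345."
    print(anonymize_script(draft, ["Acme Corp"]))
```

Pattern-based redaction misses anything it was not written to catch, so a manual review of the text is still worthwhile before uploading.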

Consider using offline AI tools when working on sensitive projects. For more detailed guidance on automating AI workflows, including video generation for YouTube, see our step-by-step guide on Automate YouTube Videos with AI.

2. Turn on security features.

- If you can, turn on two-factor authentication (2FA).

- Change your passwords often and keep an eye on your account activity.

- Use a password manager to make strong, unique passwords for HeyGen AI and other AI platforms.
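To show what a "strong, unique" password looks like in practice, here is a small sketch that uses Python's standard `secrets` module. A password manager is usually the more convenient choice. The function name `generate_password`, the default length of 20, and the 12-character minimum are assumptions chosen for this example, not requirements set by HeyGen AI.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Generate a random password containing lower, upper, digit, and symbol characters."""
    if length < 12:
        # Assumed minimum for this sketch; adjust to your own policy.
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

Generate a separate password for each platform so that a leak on one service does not expose your other accounts.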

3. Review the privacy policy.

- Learn how HeyGen AI stores, uses, and shares your information.

- Check whether your material is retained after the project is done.

- Watch for revisions to the privacy policy, which can reveal how long your data is stored and whether it is shared with third parties.

4. Use local or offline options.

- Consider AI tools that don’t require an internet connection to cut down on online exposure.

- This is especially helpful for tasks that need to stay private.

- Data point: According to the study referenced below, offline AI solutions are reportedly up to 70% less likely to leak information than cloud-based platforms.

See this AI Security Study by Cybersecurit

Be cautious with batch or interactive videos, as these involve more data. Consider offline alternatives when working with sensitive content.

The platform includes a number of functions, such as turning text into video, turning photos into video, and making avatars. Each of these handles a lot of data that might be exposed, which shows how large the platform is and why sensitive tasks call for care, especially with batch and interactive video features. It also helps frame the choice between online and offline solutions.

5. Monitor for breach alerts.

- Keep up with AI communities and security alerts.

- If a vulnerability is found, act right away.

- For example, users who responded quickly after the HeyGen AI data breach were able to limit the potential damage.

Even metadata in AI-generated avatars can reveal personal information, so always check the platform’s privacy policy, such as the HeyGen AI Privacy Policy. Keep up with HeyGen AI security alerts as well; responding quickly can limit potential data exposure.

6. Maintain regular backups and audit trails.

- Make sure you have local copies of critical projects.

- Keep track of anything you upload to HeyGen AI and other AI tools.

- Best Practice: Regular audits make workflows safer and help catch vulnerabilities early.
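One possible way to keep the audit trail described in step 6 is a local log that records every file you upload. The sketch below is an assumption-laden example rather than a feature of any platform: the function name `log_upload`, the log file name `upload_audit.jsonl`, and the record fields are all hypothetical. It appends one JSON line per upload with a timestamp, the platform name, and a SHA-256 hash of the file.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def log_upload(file_path: str, platform: str, log_path: str = "upload_audit.jsonl") -> dict:
    """Append a record of an uploaded file to a local JSON Lines audit log."""
    path = Path(file_path)
    sha256 = hashlib.sha256()
    with path.open("rb") as handle:
        # Hash in chunks so large video files are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            sha256.update(chunk)
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "platform": platform,
        "file": path.name,
        "size_bytes": path.stat().st_size,
        "sha256": sha256.hexdigest(),
    }
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry
```

Calling `log_upload("demo_clip.mp4", "HeyGen AI")` right before each upload builds a list you can review later to see exactly which files left your machine. The hash lets you match a logged entry against your local backup copy.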

Challenges and Tips

You may run into some challenges when putting these suggestions into action:

- People want all of the AI features and would rather not limit uploads. Tip: Test projects with dummy material first.

- Cloud-based AI platforms are easy to use, but they also increase your exposure. Tip: Find a balance between convenience and privacy.

- Privacy policies can be difficult to understand. Tip: Focus on how long data is kept, how it might be shared, and how to opt out.

Conclusion: Do Something Before It’s Too Late

The HeyGen AI data breach shows how quickly AI systems can expose private information. You can safeguard your projects by limiting uploads, enabling security features, monitoring for breach alerts, and using offline alternatives.

Awareness is vital when putting these steps into practice. HeyGen AI and other powerful AI platforms aren’t perfect, but you can explore AI securely without putting your data at risk if you take precautions ahead of time.

Take Action: Review your HeyGen AI workflow today. Check your uploads, update your security settings, and share best practices with your team. These steps make AI video creation safer and help protect your data from possible breaches.

Disclaimer: The images and examples in this blog are for educational and illustrative purposes only. No sensitive user information has been shared, and all images have been altered or anonymized to protect people’s privacy. This blog does not claim any affiliation with or endorsement of HeyGen AI.

Last updated on August 23, 2025

Hi, I’m Amarender Akupathni — founder of Amrtech Insights and a tech enthusiast passionate about AI and innovation. With 10+ years in science and R&D, I simplify complex technologies to help others stay ahead in the digital era.